📊 Key Statistics

Contextual aggregate metrics.

- First indexing window for quality new URLs: 1–7 days.

- Strong internal hub linking can cut discovery time ~30%.

- 15–25% of young pages may lag due to duplication.

- Reducing redirect chains can speed re-crawl up to 20%.

Search engine crawlers are the lifeblood of organic visibility, yet their resources are finite. Inefficient crawling wastes your "crawl budget," delaying indexation and hindering rankings, particularly for large or rapidly changing websites. Optimizing crawl budget ensures search engines prioritize your most valuable content, leading to faster discovery and improved search performance. Focus on efficiency; don't let valuable pages languish unseen.

💬 Expert Insight

"Cutting noisy duplication reallocates crawl budget almost automatically."

⚙️ Overview & Value

Micro Variations

- Refresh cycle (refresh): Semantic delta (title/intro) + modified date triggers revisit.

- API method (channel): Use the Indexing API for high-priority URLs when sitemap discovery lags. Note that Google officially supports this API only for job posting and livestream pages.

- Automation (automation): Scheduled submission plus status logging.

- Zero budget (cost): Architect internal links & clear sitemap priority.

- Low crawl budget (crawl): Flatten redirects, unify canonical hints.

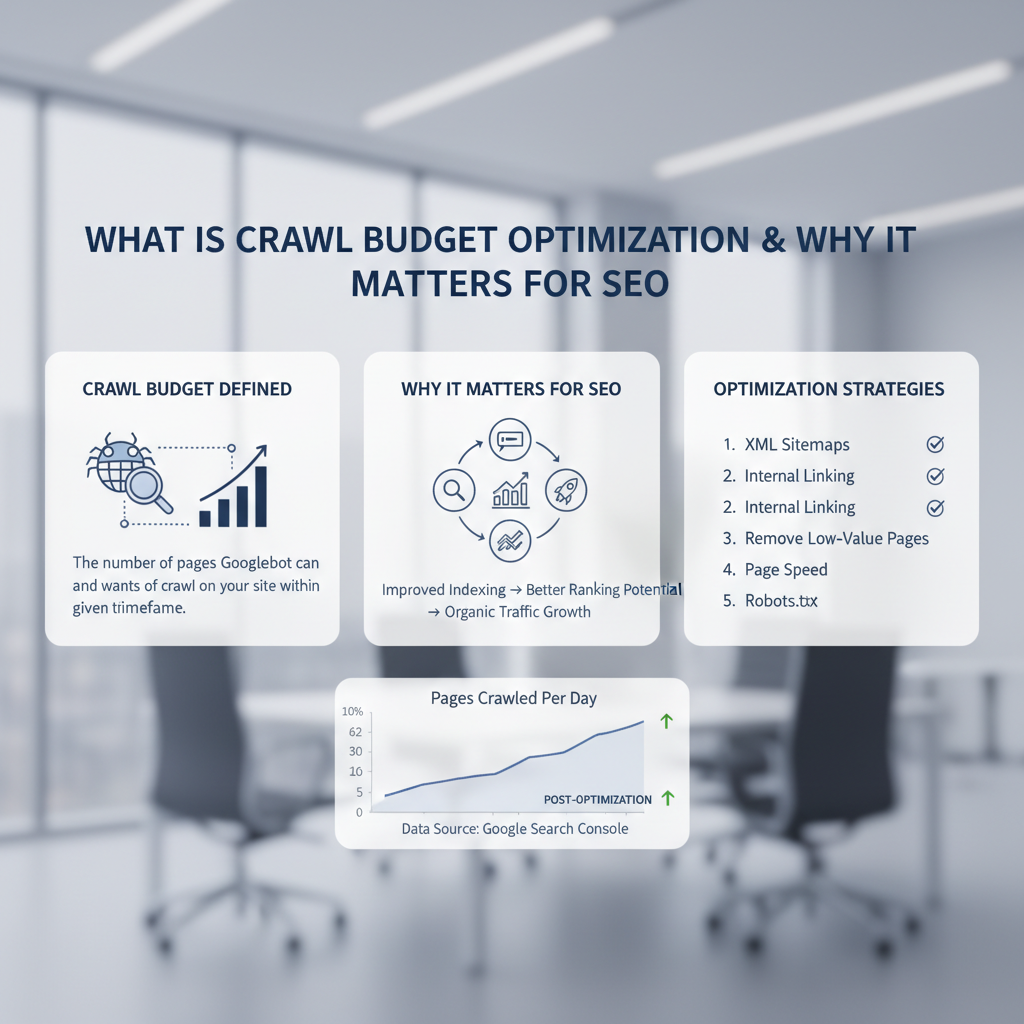

Crawl budget optimization is the process of making your website as easy and efficient to crawl as possible for search engine bots. It helps Googlebot and other crawlers spend their limited time on your most important pages instead of on low-value or redundant content. Effective crawl budget management directly affects indexation speed, keyword rankings, and overall organic traffic.

Key Factors

- Prioritize high-value URLs: Focus crawler attention on pages that drive conversions or provide crucial information.

- Reduce unnecessary redirects: Minimize redirect chains to conserve crawl budget and improve page load speed.

- Eliminate duplicate content: Implement canonical tags to consolidate ranking signals and prevent crawler confusion.

- Improve site speed: Faster loading pages allow crawlers to process more content within their allocated time.

- Optimize internal linking: Guide crawlers to important pages through a clear and logical internal link structure.

- Manage faceted navigation: Control how crawlers access faceted navigation to avoid creating an overwhelming number of URLs.
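One way to act on the redirect factor above is to resolve every redirect to its final destination and flag chains longer than one hop. The sketch below assumes a hypothetical `redirect_map` dictionary exported from your server or CMS configuration; the paths and the `max_hops` limit are illustrative, not taken from any real site.

```python
def resolve_redirects(redirects, max_hops=10):
    """Map each source URL to (final_url, hops) by following the redirect table.

    `redirects` is a hypothetical dict of {source_path: target_path}.
    A loop, or a chain longer than `max_hops`, yields final_url=None.
    """
    resolved = {}
    for start in redirects:
        seen = {start}
        current = start
        hops = 0
        while current in redirects:
            current = redirects[current]
            hops += 1
            if current in seen or hops > max_hops:
                current = None  # redirect loop or runaway chain
                break
            seen.add(current)
        resolved[start] = (current, hops)
    return resolved


# Illustrative data: one three-hop chain and one loop.
redirect_map = {
    "/old-a": "/old-b",
    "/old-b": "/old-c",
    "/old-c": "/new",
    "/loop-1": "/loop-2",
    "/loop-2": "/loop-1",
}
flattened = resolve_redirects(redirect_map)

# Sources that need more than one hop are candidates for a direct redirect.
long_chains = {src: dst for src, (dst, hops) in flattened.items() if dst and hops > 1}
```

Here `long_chains` lists the sources that could point straight at the final URL, while entries resolved to `None` indicate loops that need manual review.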

❗ Common Pitfalls

- Delay issues (issue): Audit logs for soft 404 loops & latency spikes.

- Small site (scale): Merge thin pages; tighten taxonomy; reduce tag bloat.

- Large numbers of low-quality pages: Crawl budget wasted on irrelevant content → Indexation delays for important pages / Reduced overall site authority. Solution: Implement noindex tags or consolidate content. Success: Increased crawl rate of valuable pages.

- Broken links and redirects: Crawler gets stuck in loops → Wasted crawl budget / Poor user experience. Solution: Regularly audit and fix broken links. Success: Reduced 404 errors in Google Search Console.

- Duplicate content issues: Crawler indexes multiple versions of the same content → Diluted ranking signals / Crawler confusion. Solution: Implement canonical tags. Success: Consolidated ranking signals for preferred URL.

- Slow page load speed: Crawler spends more time downloading each page → Reduced number of pages crawled per session. Solution: Optimize images and code. Success: Improved page load speed and crawl rate.

- Poor internal linking structure: Crawler struggles to find important pages → Reduced indexation of valuable content. Solution: Improve internal linking. Success: Increased crawl depth.

- Ignoring server log analysis: Missed opportunities to identify crawl inefficiencies → Suboptimal crawl budget allocation. Solution: Regularly analyze server logs. Success: Identification of crawl bottlenecks.
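As a starting point for the server log analysis mentioned in the last pitfall, the following sketch counts Googlebot requests per path and per status code. It assumes logs in the common "combined" format and identifies Googlebot by user-agent string alone, which can be spoofed; the sample lines are invented for illustration.

```python
import re
from collections import Counter

# Extracts request path, status code, and user agent from a combined-format line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def summarize_googlebot(lines):
    """Count Googlebot requests per path and per HTTP status code."""
    paths, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        paths[match["path"]] += 1
        statuses[match["status"]] += 1
    return paths, statuses


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Invented sample lines; replace with lines read from your access log.
sample_log = [
    f'66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /products?color=red HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2025:10:00:05 +0000] "GET /products?color=red HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2025:10:00:09 +0000] "GET /old-page HTTP/1.1" 404 128 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.7 - - [10/Jan/2025:10:00:12 +0000] "GET /home HTTP/1.1" 200 2048 "-" "{BROWSER_UA}"',
]

paths, statuses = summarize_googlebot(sample_log)
```

Paths with many hits but little value (for example, filter parameters) and a rising share of 4xx or 5xx statuses are the patterns worth investigating first.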

When to Reassess

Monitor crawl stats regularly. If you launch a significant content update, experience a sudden drop in indexed pages, or notice a decline in organic traffic, reassess your crawl budget optimization strategy. Adapt your approach based on observed crawler behavior and performance data.

📊 Comparison Matrix

- Manual boost (manual): URL Inspection + fresh contextual link from a crawl hub.

- Regional signals (geo): Latency + hreflang correctness aid stable discovery.

Different approaches to crawl budget optimization vary in complexity, resource requirements, and potential impact. Selecting the right strategy depends on your website's size, technical capabilities, and specific challenges. Consider the trade-offs between automated solutions and manual interventions to maximize efficiency.

Approach Comparison

| Approach | Complexity | Resources | Risk | Expected Impact |
|---|---|---|---|---|
| Robots.txt Optimization | Low | Low | Low (if done carefully) | Medium |
| Sitemap Management | Medium | Low | Low | Medium |
| Canonicalization | Medium | Medium | Low | High |
| Internal Linking Audit & Optimization | High | Medium | Low | High |
| Faceted Navigation Management | High | High | Medium | High |
| Server Log Analysis | High | High | Low | High |

🛠️ Technical Foundation

Crawl budget optimization relies on a combination of technical SEO best practices and careful monitoring. Understanding how search engines crawl and index your site is essential. Key elements include robots.txt configuration, sitemap management, server log analysis, and the implementation of canonical tags to handle duplicate content. Proper instrumentation allows for data-driven decisions.

Metrics & Monitoring 🧭

| Metric | Meaning | Practical Threshold | Tool |
|---|---|---|---|
| Crawl Errors | Number of 4xx and 5xx errors encountered by crawlers. | < 1% of crawled URLs | Google Search Console, Screaming Frog |
| Pages Crawled per Day | Average number of pages crawled daily. | Consistent or increasing trend (depending on site size/updates) | Google Search Console |
| Time Spent Downloading a Page | Average time taken to download a page. | < 2 seconds | Google PageSpeed Insights, WebPageTest |
| Index Coverage | Percentage of submitted URLs that are indexed. | > 90% | Google Search Console |
| Orphaned Pages | Pages with no internal links pointing to them. | 0 (ideally) | Screaming Frog, Ahrefs |
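The thresholds in the metrics table can be turned into a simple automated check. This is a minimal sketch: the function name, its parameters, and the sample numbers are hypothetical, and the counts would come from your own Search Console exports or crawler reports.

```python
def check_crawl_health(crawled, errors, submitted, indexed, orphaned):
    """Compare raw counts against the thresholds from the metrics table."""
    error_rate = errors / crawled if crawled else 0.0
    coverage = indexed / submitted if submitted else 0.0
    return {
        "crawl_error_rate_ok": error_rate < 0.01,  # < 1% of crawled URLs
        "index_coverage_ok": coverage > 0.90,      # > 90% of submitted URLs
        "orphaned_pages_ok": orphaned == 0,        # ideally none
    }


# Invented sample figures for illustration.
report = check_crawl_health(
    crawled=20000, errors=150, submitted=5000, indexed=4700, orphaned=3
)
failing = [name for name, ok in report.items() if not ok]
```

Running a check like this on a schedule makes it easier to notice when a metric drifts past its threshold.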

✅ Action Steps

- Early launch (lifecycle): Publish a lean quality nucleus before scale-out.

- Analyze server logs: Identify frequently crawled URLs and potential crawl traps. Success: A spreadsheet of crawl patterns.

- Audit internal linking: Ensure important pages are easily accessible to crawlers. Success: A clear internal linking structure.

- Implement canonical tags: Consolidate duplicate content and signal preferred URLs to search engines. Success: Reduced duplicate content issues in Google Search Console.

- Optimize robots.txt: Prevent crawlers from accessing unnecessary pages. Success: Reduced crawl errors.

- Submit an XML sitemap: Guide crawlers to your most important content. Success: Increased indexation rate.

- Improve page speed: Reduce page load time to allow crawlers to process more content. Success: Improved page speed scores.

- Monitor crawl stats in Google Search Console: Track crawl errors and pages crawled per day. Success: Proactive identification of crawl issues.

- Implement the "Content Pruning Framework": Systematically remove or consolidate low-value content that drains crawl budget. Success: A leaner, more efficient website with higher quality content.

Key Takeaway: Prioritize crawlability for valuable content; eliminate obstacles.
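For the sitemap step, a small script can generate the XML from a list of priority URLs. The sketch below uses the standard sitemap namespace; the `build_sitemap` helper and the example URLs and dates are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML as a string for (url, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = url
        ET.SubElement(node, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical high-value pages with their last modification dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-10"),
    ("https://example.com/category/shoes", "2025-01-08"),
])
```

Listing only canonical, indexable URLs keeps the sitemap a clean signal of which pages matter most.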

Practical Example

An e-commerce site had thousands of product variations, each with a unique URL, and Googlebot spent significant time crawling these low-value pages. By adding canonical tags that point to the main product pages and restricting crawler access to faceted navigation parameters, the site shifted crawl budget toward higher-value pages such as category pages and blog posts. This resulted in faster indexation of new products and improved rankings for target keywords.
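The canonicalization idea in this example can be sketched as a function that maps every filtered variant to one preferred URL. The parameter names in `FACET_PARAMS` and the shop URLs are hypothetical; a real site would use its own list of parameters that do not change the main content.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet and tracking parameters that do not change the main content.
FACET_PARAMS = {"color", "size", "sort", "utm_source"}


def canonical_url(url, ignored=FACET_PARAMS):
    """Drop ignored query parameters and sort the rest for a stable URL."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in ignored)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variants = [
    "https://shop.example.com/shoes?color=red&size=42",
    "https://shop.example.com/shoes?sort=price&color=blue",
    "https://shop.example.com/shoes?page=2&color=red",
]
canonicals = {canonical_url(u) for u in variants}
```

Three crawlable variants collapse into two preferred URLs here, because pagination (`page`) is kept while the filter parameters are dropped.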

🧠 Micro Q&A Cluster

What should you expect from crawl budget optimization in 2025?

Make each page's intro semantically unique and add a couple of contextual links.

Why is a page not getting indexed?

Structured data, a proper canonical, and a link from a hub page accelerate baseline indexing.

How long does indexing take?

Quality new URLs are typically first indexed within 1–7 days; structured data, a proper canonical, and a hub link accelerate that baseline.

Is a tool required?

Not for the basics: structured data, a proper canonical, and a hub link accelerate baseline indexing.

Checkpoint

Consistent internal linking speeds up indexing.

What to keep in mind

Make the opening paragraphs unique and optimize the initial render.

How do you address common indexing errors?

Make the intro semantically unique and add a couple of contextual links.

Targeted Questions & Answers

What is a crawl budget?

Crawl budget is the number of pages Googlebot will crawl on your site within a given timeframe. It is shaped by how much crawling your server can handle (its "crawl health") and by how much demand Google sees for your content (its perceived value).

Why is crawl budget optimization important for large websites?

Large websites often have many pages, making it crucial to ensure Googlebot prioritizes the most important ones. Without optimization, valuable pages may not be crawled frequently, impacting indexation and rankings.

How do I know if I have a crawl budget problem?

Signs include low indexation rates, slow discovery of new content, and a high number of crawl errors in Google Search Console.

What is the role of robots.txt in crawl budget optimization?

The robots.txt file tells search engine crawlers which parts of your site to avoid, preventing them from wasting resources on irrelevant pages.
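Before deploying robots.txt rules, it can help to test them against sample paths. The sketch below uses Python's standard `urllib.robotparser`; the rules and paths are illustrative assumptions. Python's parser applies the first matching rule, so the `Disallow` lines are placed before the catch-all `Allow`.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules; adapt the disallowed sections to your own site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path, agent="Googlebot"):
    """Return True if the given user agent may fetch the path."""
    return parser.can_fetch(agent, path)


checks = {
    path: is_crawlable(path)
    for path in ["/products/shoes", "/cart/checkout", "/search/results"]
}
```

A check like this catches rules that accidentally block valuable sections before crawlers ever see them.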

How do canonical tags help with crawl budget?

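Canonical tags tell search engines which version of a page is the "master" version. They are a hint rather than a directive: duplicates may still be crawled, but indexing and ranking signals consolidate on the preferred URL, and duplicates tend to be crawled less often.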
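To audit whether pages actually declare a canonical, you can extract the tag from the HTML. This is a minimal sketch using the standard library's `html.parser`; the `CanonicalFinder` class and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Invented sample page for illustration.
page = """<html><head>
<title>Red shoes</title>
<link rel="canonical" href="https://shop.example.com/shoes">
</head><body>...</body></html>"""

declared = find_canonical(page)
```

Pages where `find_canonical` returns `None`, or a URL that differs from the one you expect, are the ones to review.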

Does site speed affect crawl budget?

Yes, faster loading pages allow crawlers to process more content within their allocated time, improving crawl efficiency.

What is faceted navigation and how does it impact crawl budget?

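Faceted navigation creates numerous URLs based on different filter combinations. Without proper management, it can lead to crawler overload and wasted crawl budget. Use robots.txt to keep crawlers away from low-value filter combinations, or noindex to keep crawled filter pages out of the index. Note that a noindex directive is only seen if the page is not blocked in robots.txt.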

How often should I review my crawl budget optimization strategy?

Regularly monitor crawl stats and reassess your strategy whenever you make significant changes to your website's structure or content.

🚀 Next Actions

Crawl budget optimization is an ongoing process that requires continuous monitoring and refinement. By prioritizing crawlability, eliminating technical obstacles, and focusing on high-value content, you can ensure that search engines efficiently discover and index your website, leading to improved organic visibility and traffic. Regular audits and adjustments are crucial for sustained success.

- Initial Assessment — Identify crawl errors and potential crawl traps.

- Robots.txt Configuration — Block unnecessary URLs from crawler access.

- Sitemap Submission — Submit an updated XML sitemap to Google Search Console.

- Canonicalization Audit — Implement canonical tags to consolidate duplicate content.

- Internal Linking Optimization — Improve internal linking structure for better crawl depth.

- Page Speed Optimization — Enhance page load speed for faster crawling.

- Server Log Analysis — Monitor crawler behavior and identify crawl inefficiencies.

- Performance Monitoring — Track crawl stats and adjust strategy as needed.
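For the internal linking step, crawl depth can be estimated with a breadth-first search over the site's link graph. The sketch below assumes a hypothetical `site_links` dictionary (page to outgoing internal links), such as one exported from a crawler; pages unreachable from the home page are reported as orphans.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Invented link graph: "/old-landing" links out but nothing links to it.
site_links = {
    "/": ["/category", "/blog"],
    "/category": ["/product-1", "/product-2"],
    "/blog": ["/blog/post-1"],
    "/product-1": [],
    "/old-landing": ["/product-1"],
}

known_pages = set(site_links) | {t for targets in site_links.values() for t in targets}
depths = click_depths(site_links)
orphans = sorted(known_pages - set(depths))
```

Important pages with a large depth value are candidates for links from hub pages, and orphans need at least one internal link to be discovered through crawling.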
